Welcome to UCLA’s Teaching and Learning Center

We foster and champion effective teaching, grounded in sound pedagogy and enhanced by innovation, to promote successful learning for our diverse student population.

To realize this mission, we collaborate with faculty and other campus partners, drawing on our wide range of expertise in pedagogy, educational technology, assessment, learning spaces, and curricular research.

News

UCLA Writing Programs Professor Laura Hartenberger shares her thoughts on ChatGPT (michelle chen, July 31, 2023)

https://teaching.ucla.edu/wp-content/uploads/2023/07/Noema_AI_FINAL.jpg

1186

947

michelle chen

https://teaching.ucla.edu/wp-content/uploads/2020/07/Uxd_Blk_AdvOfTeaching_B-copy-1-455x200.png

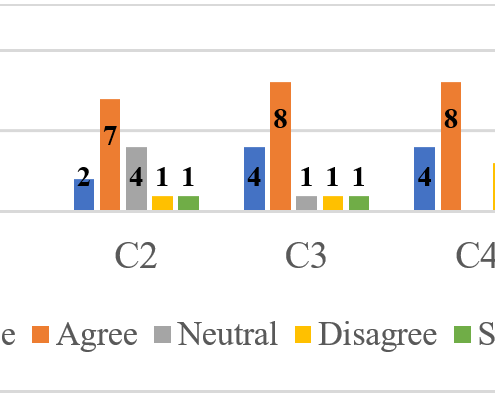

michelle chen2023-07-31 12:52:282023-07-31 12:52:28UCLA Writing Programs Professor Laura Hartenberger shares her thoughts on ChatGPT https://teaching.ucla.edu/wp-content/uploads/2023/07/GE-FSI-chart.png

351

468

michelle chen

https://teaching.ucla.edu/wp-content/uploads/2020/07/Uxd_Blk_AdvOfTeaching_B-copy-1-455x200.png

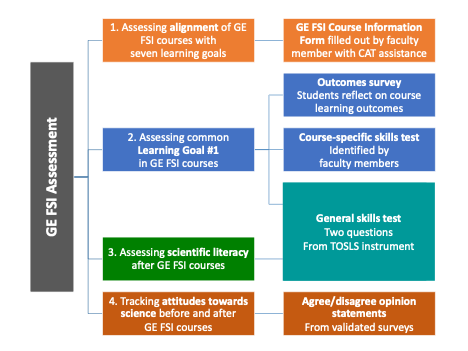

michelle chen2023-07-17 13:17:492023-07-17 13:17:49Recent Publication Shares Framework and Results from CAT’s Assessment of the General Education Foundations of Scientific Inquiry (GE FSI) Program

930

930

michelle chen

https://teaching.ucla.edu/wp-content/uploads/2020/07/Uxd_Blk_AdvOfTeaching_B-copy-1-455x200.png

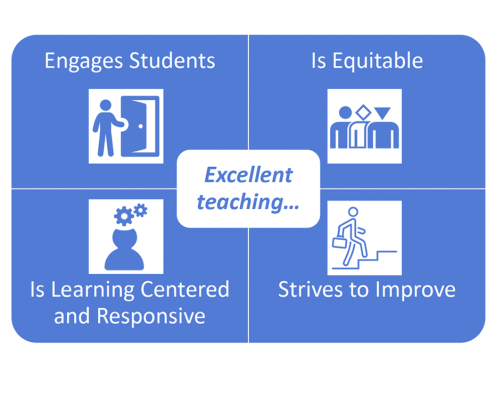

michelle chen2023-06-30 15:08:542023-07-05 13:33:47UCLA’s Holistic Evaluation of Teaching Program Congratulates Cohort 1, Welcomes Cohort 2

UCLA’s Holistic Evaluation of Teaching Program Showcased at National Summit (michelle chen, June 30, 2023)

Assessing the Impact of Diversity Instruction in STEM (michelle chen, June 1, 2023)

702

1188

michelle chen

https://teaching.ucla.edu/wp-content/uploads/2020/07/Uxd_Blk_AdvOfTeaching_B-copy-1-455x200.png

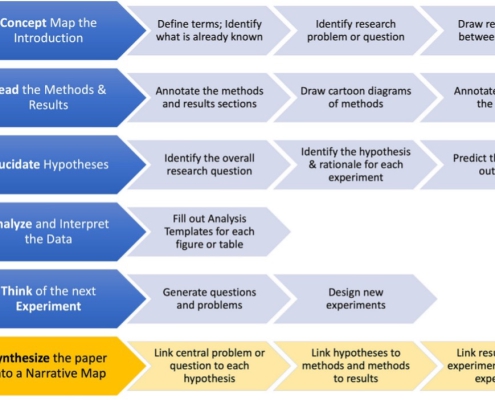

michelle chen2023-05-25 16:24:192023-05-25 16:25:29Fostering Scientific Literacy Through Synthesis: the CREATE(S) Process

Celebrating Adrienne Lavine’s Five Years of Stellar Service as Associate Vice Provost for CAT (michelle chen, October 26, 2022)

Highlighting the 2020 Distinguished Teaching Assistants (michelle chen, October 4, 2022)

Adding Genuine Research to a New Genetics Lab Class IIP Preliminary Summary (Anita Han, September 19, 2022)

Video-based Child Observation Course Integration Initiative IIP Preliminary Summary Summer 2022 (Anita Han, September 15, 2022)

Highlighting the 2020 Distinguished Teaching Non-Senate Faculty (michelle chen, August 31, 2022)

Highlighting the 2020 Distinguished Teaching Senate Faculty (michelle chen, August 4, 2022)

Scaling Up a Life Sciences College Career Exploration Course to Foster STEM Confidence and Career Self-Efficacy (Anita Han, June 27, 2022)

CEA project “All Bruins Belong” highlighted in UCLA Newsroom article (michelle chen, June 6, 2022)

Research Shows How UCLA’s PAROSL Program Helps Faculty Shift Their Thinking Towards Student Learning

Senior Survey Introduces Custom Questions About Academic Experiences (michelle chen, April 26, 2022)

774

1016

michelle chen

https://teaching.ucla.edu/wp-content/uploads/2020/07/Uxd_Blk_AdvOfTeaching_B-copy-1-455x200.png

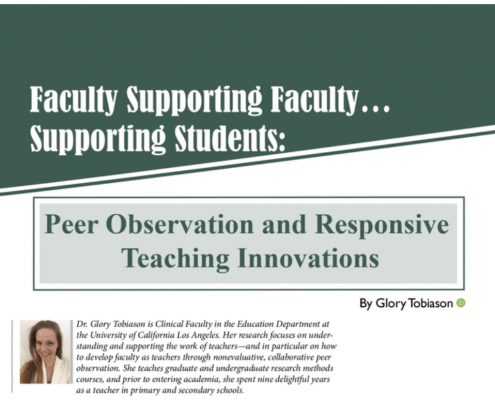

michelle chen2022-01-04 15:46:322022-01-04 15:57:55Two Publications Document How UCLA’s PAROSL Program Supports Inclusive, Student-Centered Pedagogy

900

900

michelle chen

https://teaching.ucla.edu/wp-content/uploads/2020/07/Uxd_Blk_AdvOfTeaching_B-copy-1-455x200.png

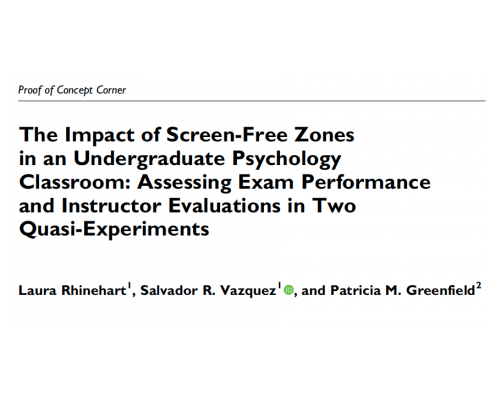

michelle chen2021-08-10 15:35:012021-08-10 15:51:36Summary of "The impact of screen-free zones in an undergraduate psychology classroom: Assessing exam performance and instructor evaluations in two quasi-experiments"

Research Collaboration Highlights the Role of Undergraduate Research in Building Career-Related Skills for Humanities and Social Science Majors (Anita Han, August 9, 2021)

Center for Educational Assessment Receives Racial and Social Justice Grant to Increase the Sense of Belonging within the UCLA Community (michelle chen, June 18, 2021)
